Automated vehicles (AVs) represent a significant advance in contemporary transportation. Because they have the potential to reduce traffic accidents, decrease congestion, and improve efficiency while operating and executing tasks independently, AVs depend fundamentally on decision-making algorithms. Central to AV operation is real-time decision-making, which requires analyzing environmental information and selecting driving actions across a wide range of scenarios. This paper reviews the principal methods used in AV decision-making, covering rule-based systems, machine learning (ML), reinforcement learning (RL), and hybrid approaches. It also considers the current challenges AVs face, including real-time processing, safety, and ethical decision-making. Furthermore, the review examines how AVs integrate the components that support decision-making, namely perception, planning, control, and vehicle-to-vehicle interaction. The evolution of AV decision-making is analyzed with emphasis on deep reinforcement learning (DRL), explainable artificial intelligence (XAI), and cooperative driving systems. By evaluating these methods and challenges in detail, the paper offers insights into the future direction of AV decision-making systems.

Self-driving cars hold great promise for the vehicle and transportation industries, with a potentially revolutionary impact on safety, productivity, and the provision of broader, more inclusive mobility options for our communities. At the core of this promise is the vehicle’s “brain”: how does it integrate information from its sensors (LIDAR, radar, ultrasonic sensors, cameras) to form, for example, the intention to overtake? Driverless cars, referred to in the industry as autonomous vehicles (AVs), are likely to change how people and goods are transported within and between cities, helping to address long-standing problems of traffic congestion, accidents, pollution, energy consumption, and access for those who cannot drive because of old age, disability, or other reasons. This transformative potential depends on the AV making good, safe, and timely decisions in complex and uncertain traffic scenarios. Unlike conventional cars, which rely on a human driver to interpret sensory perceptions and to apply accumulated experience, intuitive judgment, and logical inference in the moment, AVs rely on an integrated system of computational frameworks. These frameworks continuously process large volumes of data from multiple onboard sensors, such as LIDAR, radar, cameras, GPS, and inertial measurement units, to create and maintain a rich, dynamic map of the vehicle’s environment. This steady stream of sensory data is used to detect and classify objects, understand road infrastructure, and interpret the behavior of other road users as it unfolds. Decision-making is therefore the key operational capability in both the development and the operation of autonomous vehicles.

Decision-making is much more than executing control commands; it is a complex collaboration among several subsystems. Perception modules interpret raw sensor readings to produce an accurate representation of the vehicle’s surroundings. Prediction systems anticipate the paths and likely intentions of other agents (cars, pedestrians, or cyclists) and forecast how the traffic scene will evolve over time. Planning algorithms then select the most suitable and safe action based on considerations including collision avoidance, compliance with traffic laws, passenger comfort, and overall trip efficiency. Finally, control systems execute the planned actions, commanding acceleration, braking, and steering so that the vehicle responds smoothly and safely. This holistic decision-making architecture enables self-driving cars to approach human-level driving, and it can potentially exceed human drivers in reliability, response time, and the capacity to process large volumes of data in parallel. Whereas people can lose focus, become tired, or exhibit inconsistent judgment, AVs are not subject to these limitations; instead, they follow programmed safety requirements and can react precisely to complex and rapidly evolving driving conditions. At the same time, AVs can operate across different environments and levels of traffic complexity (e.g., a dense urban intersection or a highway cruise), which opens a wide range of potential applications and could reshape mobility worldwide [1,2].

At a fundamental level, decision-making in an AV involves interpreting traffic scenarios, which are complex, often dynamic, and may include other vehicles, pedestrians, traffic signals, and unforeseen circumstances. Human drivers largely decide their course of action based on intuition, experience, and sometimes heuristic shortcuts; AVs, by contrast, rely on algorithmic logic grounded in formal modeling and data-driven approaches to make decisions that prioritize safety, efficiency, and compliance with traffic regulations. This shift from human-based to machine-based decision processes rests on the fusion of multi-modal sensor data with state-of-the-art algorithms capable of handling the uncertainty and variability inherent in real-world driving [3–5].

The development of autonomous vehicle technology represents a paradigm shift in transportation, with implications for safety, efficiency, and mobility. At the heart of this advance are decision-making systems, which have evolved from simple rule-based methods into highly adaptable systems that exploit artificial intelligence (AI), machine learning, and sensor fusion [6]. These systems allow vehicles not only to perceive their environment but also to predict what might happen next and plan an appropriate course of action, increasing their reliability across traffic situations [7–10]. Historically, public research on decision-making for automated vehicles began in earnest in the 1980s and 1990s, a critical period that catalyzed today’s AV technologies. The Navlab program at Carnegie Mellon University and the European PROMETHEUS project (1987–1995) were significant early milestones: both sought to demonstrate that vehicles could drive themselves by fusing inputs (cameras, ultrasonic sensors, early radar) within a rule-based logic system that controlled the vehicle. The original goal of these projects was to allow vehicles to operate on a known route or structured road with little or no intervention from a human operator.

Navlab is one example of an early research platform that combined computer vision with sensor fusion for vehicle localization and obstacle detection. Likewise, PROMETHEUS, a large European consortium, developed technologies conducive to highway autopilots (e.g., automated lane keeping, adaptive cruise control, and driver assistance). Both relied on deterministic, rule-based algorithms built on established if-then rules and logical structures, expressing vehicle behavior as a set of “if this, then that” statements [11–13]. Rule-based methods were easy to implement and worked adequately in structured or simplified environments, typically closed test tracks or sparsely trafficked rural roads. However, their limitations emerged quickly once vehicles faced real-world traffic [14]. Real-world traffic is unpredictable, dynamic, and uncertain: there are many interacting agents, diverse and sometimes conflicting traffic codes, variable road conditions, and spontaneous events (e.g., pedestrian crossings and emergency vehicles). Deterministic rule-based systems struggle to scale to these uncertainties because they cannot respond flexibly or account for the stochastic states and actions of other road users. For example, a rule-based system can fail outright when it encounters a state that none of its rules encodes. In response to these challenges, researchers shifted toward probabilistic and model-based frameworks that could explicitly represent uncertainty and variability. A key development was the use of Markov decision processes (MDPs), which model decision-making as a stochastic process in which outcomes depend on both the current state of the environment and the agent’s action, so that (in principle) an optimal policy can be defined that maximizes long-term expected reward.
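To make the MDP formulation concrete, the following minimal Python sketch runs value iteration, a standard dynamic-programming method for solving small MDPs, on a hypothetical three-state car-following problem. The states, actions, transition probabilities, and rewards are invented for illustration and do not come from the cited works.

```python
# Value iteration on a tiny, hypothetical car-following MDP.
# States, actions, probabilities and rewards are illustrative assumptions only.

# P[state][action] -> list of (probability, next_state, reward)
P = {
    "far": {
        "keep":  [(0.8, "far", 1.0), (0.2, "close", 1.0)],
        "brake": [(1.0, "far", 0.5)],
    },
    "close": {
        "keep":  [(0.5, "close", 1.0), (0.5, "collision", -100.0)],
        "brake": [(0.9, "far", 0.5), (0.1, "close", 0.5)],
    },
    # Absorbing terminal state: no further reward.
    "collision": {
        "keep":  [(1.0, "collision", 0.0)],
        "brake": [(1.0, "collision", 0.0)],
    },
}


def q_value(P, V, s, a, gamma):
    """Expected discounted return of taking action a in state s, then following V."""
    return sum(p * (r + gamma * V[s2]) for p, s2, r in P[s][a])


def value_iteration(P, gamma=0.9, tol=1e-8):
    """Return optimal state values V and the greedy policy derived from them."""
    V = {s: 0.0 for s in P}
    while True:
        delta = 0.0
        for s in P:
            # Bellman optimality backup: best expected return over all actions.
            best = max(q_value(P, V, s, a, gamma) for a in P[s])
            delta = max(delta, abs(best - V[s]))
            V[s] = best
        if delta < tol:
            break
    policy = {s: max(P[s], key=lambda a: q_value(P, V, s, a, gamma)) for s in P}
    return V, policy


V, policy = value_iteration(P)
print(policy)
```

With these numbers the greedy policy keeps speed when the gap is large and brakes when it is small, because the −100 collision penalty outweighs the small reward for maintaining speed. Real driving state spaces are far too large for this exhaustive sweep, which is the computational challenge that motivates approximate methods.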
However, because MDPs assume that the agent (driver) observes the full state of the environment, they do not apply to scenarios with partial, missing, or noisy observations, as in real-world driving [15]. To address this weakness, researchers developed partially observable Markov decision processes (POMDPs). As the name implies, POMDPs generalize MDPs to partial observability: the agent does not know the true state of the environment and must decide based on probabilistic estimates built from its sensor observations. POMDPs allow autonomous systems to capture uncertainty in perception and prediction and to make sequential decisions that balance immediate safety against longer-term objectives [16–18]. These frameworks form the conceptual basis for more sophisticated algorithms that can handle complex, dynamic traffic situations. MDPs and POMDPs were a substantial improvement in AV decision-making, allowing AVs to decide more robustly and flexibly. However, these methods face computational challenges due to the high dimensionality of real-world driving environments, which has motivated continued research into scalable approximations and real-time implementations [19–22]. Meanwhile, advanced sensor technologies such as Light Detection and Ranging (LIDAR), radar, and high-resolution cameras have significantly increased the sensing capabilities of AVs, giving them the environmental data needed for good decisions. When these sensors are combined through sensor fusion, the resulting data provide rich and accurate situational awareness for complex decision-making algorithms [23–31].
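Under partial observability the agent acts on a belief, a probability distribution over hidden states, which is updated after every observation with Bayes’ rule: predict with the transition model, weight by the observation likelihood, and normalise. The sketch below shows that update, assuming a hypothetical two-state pedestrian-intent model with made-up transition and sensor probabilities and, for brevity, transitions that do not depend on the ego vehicle’s action.

```python
# Discrete POMDP belief update (Bayes filter) for a hypothetical
# "is the pedestrian about to cross?" hidden state. All numbers are assumptions.

HIDDEN = ["waiting", "crossing"]

# T[s][s2]: probability of moving from hidden state s to s2 in one step.
T = {
    "waiting":  {"waiting": 0.9, "crossing": 0.1},
    "crossing": {"waiting": 0.0, "crossing": 1.0},
}

# O[s2][o]: probability that a noisy detector reports o when the true state is s2.
O = {
    "waiting":  {"moving": 0.2, "still": 0.8},
    "crossing": {"moving": 0.7, "still": 0.3},
}


def update_belief(belief, observation):
    """b'(s2) is proportional to O(o | s2) * sum_s T(s2 | s) * b(s)."""
    predicted = {s2: sum(T[s][s2] * belief[s] for s in HIDDEN) for s2 in HIDDEN}
    unnormalised = {s2: O[s2][observation] * predicted[s2] for s2 in HIDDEN}
    z = sum(unnormalised.values())
    return {s2: value / z for s2, value in unnormalised.items()}


belief = {"waiting": 0.95, "crossing": 0.05}
for obs in ["still", "moving", "moving"]:
    belief = update_belief(belief, obs)
    print(obs, round(belief["crossing"], 3))
```

Starting from a 5% prior, one “still” reading leaves the crossing probability near 6%, while two subsequent “moving” readings raise it to roughly 74%, information a POMDP planner could use to slow down pre-emptively.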
Alongside these advances in sensing and monitoring, there has been major progress in AI, particularly deep learning, which introduced data-driven decision-making methods that can generalize across scenarios and exploit massive datasets to improve predictive and reactive behaviors [32–36].

Decision-making frameworks in autonomous vehicles employ a hierarchical structure that reflects the complex, layered nature of driving. This hierarchy is necessary to manage the large number of decisions an autonomous vehicle must make, ranging from high-level route choices to fine-grained control of the vehicle’s motion. At the top of this hierarchy, the strategic layer handles high-level planning, such as route selection and navigation goals, which then feed into the tactical and operational levels. Strategic planning focuses on finding an optimal route, including any coordinated actions that may be needed, from an origin to a destination across a large and complex road network. Many strategic planners use graph search algorithms, e.g., Dijkstra’s algorithm or A*, to explore candidate paths and determine the most efficient, shortest, or safest one. In addition, strategic planning must incorporate real-world constraints such as traffic volumes, road closures, and travel-time estimates, all of which make this decision layer more complicated [37–39]. Beneath strategic planning is the behavioral decision-making layer, which sits between high-level navigation intent and low-level vehicle control. Behavioral decision-making interprets the intentions and predicted behaviors of other road users (vehicles, pedestrians, cyclists) to make the informed decisions needed to maneuver through traffic. This includes understanding and complying with traffic laws, regulations, and social norms, which also vary by region.
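The graph-search step of strategic planning can be illustrated with a compact A* search. The sketch below assumes a hypothetical five-node road network with planar coordinates and distance-based edge costs; it uses straight-line distance as the heuristic, which is admissible here because no road segment is shorter than the straight line between its endpoints.

```python
import heapq
import math

# Hypothetical road network: node -> (x, y) position in km.
COORDS = {"A": (0, 0), "B": (2, 0), "C": (2, 2), "D": (4, 2), "E": (4, 0)}

# Directed edges with travel cost in km. Congestion could be modelled by
# increasing a weight, and a road closure by deleting an edge.
EDGES = {
    "A": {"B": 2.0, "C": 3.0},
    "B": {"E": 2.0, "C": 2.0},
    "C": {"D": 2.0},
    "E": {"D": 2.0},
    "D": {},
}


def heuristic(node, goal):
    """Straight-line distance to the goal (never overestimates road distance)."""
    (x1, y1), (x2, y2) = COORDS[node], COORDS[goal]
    return math.hypot(x2 - x1, y2 - y1)


def a_star(start, goal):
    """Return (path, cost) of the cheapest route, or (None, inf) if unreachable."""
    open_heap = [(heuristic(start, goal), 0.0, start)]  # entries: (f = g + h, g, node)
    best_g = {start: 0.0}
    came_from = {}
    while open_heap:
        _, g, node = heapq.heappop(open_heap)
        if node == goal:
            path = [node]
            while node in came_from:
                node = came_from[node]
                path.append(node)
            return path[::-1], g
        if g > best_g.get(node, math.inf):
            continue  # stale entry: a cheaper route to this node was already found
        for neighbour, weight in EDGES[node].items():
            new_g = g + weight
            if new_g < best_g.get(neighbour, math.inf):
                best_g[neighbour] = new_g
                came_from[neighbour] = node
                heapq.heappush(open_heap, (new_g + heuristic(neighbour, goal), new_g, neighbour))
    return None, math.inf


print(a_star("A", "D"))
```

Here a_star("A", "D") finds the route A → C → D with cost 5.0. Modelling a road closure by deleting the C → D edge makes the same call fall back to A → B → E → D with cost 6.0.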
For example, an autonomous vehicle must decide when it is safe to change lanes, yield to crossing pedestrians, merge into traffic, or stop for traffic lights and signs. Such decisions can involve high uncertainty arising from noisy sensor data and unpredictable human behavior, and they therefore rely on probabilistic reasoning and risk-assessment models to evaluate alternatives and manage safety [40–43]. The final layer of the hierarchy, following the strategic and behavioral layers discussed above, is local motion planning, which translates the decisions made at those higher levels into real-time control commands. Local motion planning produces collision-free trajectories that the vehicle can follow, optimizing objectives such as safety, passenger comfort, and energy consumption while satisfying dynamic constraints such as vehicle dynamics and roadway geometry. This layer must compute smooth, safe paths that react quickly to changes in the environment, such as a pedestrian stepping into the roadway or sudden emergency braking by the vehicle ahead, while limiting jerk and lateral acceleration to keep passengers comfortable [44–47]. In recent years, these maneuvers have increasingly relied on data-driven learning approaches, particularly reinforcement learning (RL) and imitation learning. With RL, an AV can learn decision-making policies through interaction with a simulated or test environment, which provides rapid feedback in the form of rewards for safe trajectories and penalties for unsafe ones. RL thus allows the AV to select appropriate behavior in complex, dynamic environments without manually specifying patterns of safe driving.
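At the local motion-planning layer, one common textbook way to respect jerk and lateral-acceleration limits is a quintic (fifth-order) polynomial; for a rest-to-rest maneuver it is the minimum-jerk profile y(τ) = d(10τ³ − 15τ⁴ + 6τ⁵) with τ = t/T. The sketch below, assuming a lane change of lateral offset d that starts and ends with zero lateral velocity and acceleration, evaluates this profile and computes the shortest duration T whose peak lateral acceleration (10/√3 · d/T²) and peak jerk (60 · d/T³) stay within comfort limits. The limits are illustrative values, and this is not necessarily the planner used in the cited works.

```python
import math


def min_jerk_lateral(d, T, t):
    """Lateral offset, velocity, acceleration and jerk at time t (0 <= t <= T)
    of the rest-to-rest minimum-jerk (quintic) profile from 0 to d over T."""
    tau = t / T
    y = d * (10 * tau**3 - 15 * tau**4 + 6 * tau**5)
    v = d / T * (30 * tau**2 - 60 * tau**3 + 30 * tau**4)
    a = d / T**2 * (60 * tau - 180 * tau**2 + 120 * tau**3)
    j = d / T**3 * (60 - 360 * tau + 360 * tau**2)
    return y, v, a, j


def shortest_comfortable_duration(d, a_max, j_max):
    """Smallest T keeping peak |lateral acceleration| <= a_max and peak |jerk| <= j_max."""
    t_acc = math.sqrt(10 / math.sqrt(3) * abs(d) / a_max)  # from 10/sqrt(3) * d / T^2
    t_jerk = (60 * abs(d) / j_max) ** (1 / 3)               # from 60 * d / T^3
    return max(t_acc, t_jerk)


# Hypothetical 3.5 m lane change with comfort limits of 1.5 m/s^2 and 2.0 m/s^3.
T = shortest_comfortable_duration(3.5, a_max=1.5, j_max=2.0)
print(round(T, 2))
for k in range(5):
    t = T * k / 4
    print([round(x, 3) for x in min_jerk_lateral(3.5, T, t)])
```

For these limits the jerk bound dominates and gives T = 105^(1/3) ≈ 4.72 s; a planner would typically evaluate several such candidate trajectories against collision checks before committing to one.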
Imitation learning (i.e., learning from demonstrations) can be combined with RL so that the AV learns from examples of expert (human) driving, which can bootstrap its decision-making policy and reduce training time. Together, RL and imitation learning can improve the reliability and flexibility of AV decision-making.
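Behavioral cloning, the simplest form of imitation learning, fits a policy to expert (state, action) pairs by ordinary supervised regression. In the sketch below the “expert” is a hypothetical noisy car-following rule standing in for logged human driving, and the policy is a linear model fitted with NumPy least squares; a cloned policy like this could serve as the initial policy for subsequent RL fine-tuning.

```python
import numpy as np

# Behavioral cloning sketch: regress actions on states from (synthetic) demonstrations.
rng = np.random.default_rng(0)


def expert_accel(gap, rel_speed):
    # Hypothetical expert: close towards a 30 m gap and match the lead car's speed.
    return 0.1 * (gap - 30.0) + 0.5 * rel_speed


# Demonstrations: state = (gap [m], lead speed minus ego speed [m/s]), action = accel [m/s^2].
n = 500
gaps = rng.uniform(10.0, 60.0, size=n)
rel_speeds = rng.uniform(-5.0, 5.0, size=n)
actions = expert_accel(gaps, rel_speeds) + rng.normal(0.0, 0.05, size=n)  # noisy expert

# Linear policy a = w0 + w1 * gap + w2 * rel_speed, fitted by least squares.
X = np.column_stack([np.ones(n), gaps, rel_speeds])
w, *_ = np.linalg.lstsq(X, actions, rcond=None)


def cloned_policy(gap, rel_speed):
    """Acceleration command imitated from the demonstrations."""
    return w[0] + w[1] * gap + w[2] * rel_speed


print(np.round(w, 2))  # should be close to the expert's [-3.0, 0.1, 0.5]
```

A known limitation is distribution shift: the cloned policy is only reliable in states resembling the demonstrations, which is one reason cloning is often followed by RL rather than used alone.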

In summary, this hierarchical decision-making framework, encompassing strategic planning, behavioral decision-making, and local motion planning, offers a structured yet flexible way for autonomous vehicles to navigate the uncertainties of real-world driving safely and efficiently. Its modular structure accommodates new technologies and algorithms and allows AV capabilities to be improved incrementally [48–52]. Despite this progress, AV decision-making systems still face numerous obstacles: the variable and unpredictable behavior of human drivers and pedestrians, the ethical challenge of making socially acceptable decisions, strict real-time processing constraints, and the lack of the transparency or explainability needed for public acceptance and regulatory approval [53–56], as well as regional differences in traffic regulations, driving cultures, and infrastructure. Addressing these differences requires adaptable, scalable decision-making frameworks that can adjust their behavior to the local context [57–60]. Emerging technologies such as vehicle-to-everything (V2X) communication and collaborative multi-agent decision-making frameworks may help overcome some of these hurdles by increasing real-time information exchange and enabling coordinated maneuvers among vehicles and between vehicles and infrastructure [61–63].

The purpose of this comprehensive review is to trace the evolution of decision-making systems in automated and autonomous vehicles by synthesizing existing literature and techniques, highlighting key technological developments, and emphasizing current challenges and future directions. By systematically following the path from foundational theories to current techniques, the review aims to clarify how decision-making systems have evolved to meet the accelerating demands of autonomous mobility amid complex real-world interactions.

Many methods have been proposed to support decision-making in AVs, each with its own advantages and disadvantages. We elaborate on three main approaches: rule-based systems, machine learning, and hybrid methods.

2.1. Rule-based Systems

Rule-based systems have been used in autonomous vehicles for many years, for tasks such as lane following, stopping at red lights, and avoiding collisions. These systems rely on a finite set of predefined behavior rules that map sensor inputs to vehicle actions. Their main advantage is simplicity and interpretability: an engineer can easily trace and understand how a decision was made. Lin states that rule-based systems work effectively in well-understood environments where predictable behavior is expected.

Unfortunately, these systems generally cannot handle the complexity of dynamic environments. As Goodall notes, AVs must cope with uncertain or unpredictable scenarios, such as human drivers executing erratic maneuvers or pedestrians crossing unexpectedly. A rule-based system may also be unable to prioritize conflicting objectives, for example whether to yield to a pedestrian or continue driving.
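The fixed-priority if-then logic described in this subsection can be sketched as follows. The Scene fields, the rule set, and the 2-second headway are hypothetical simplifications chosen for illustration, not a production rule base.

```python
from dataclasses import dataclass


@dataclass
class Scene:
    # Hypothetical, highly simplified perception output.
    pedestrian_in_crosswalk: bool
    light: str                 # "red", "yellow" or "green"
    obstacle_distance_m: float
    speed_mps: float


def rule_based_decision(scene: Scene) -> str:
    """Check if-then rules in a fixed priority order; the first matching rule wins.
    The ordering is how this design resolves conflicting objectives."""
    # Rule 1 (highest priority): never drive into a pedestrian.
    if scene.pedestrian_in_crosswalk:
        return "stop"
    # Rule 2: obey red lights.
    if scene.light == "red":
        return "stop"
    # Rule 3: keep at least a 2-second headway to the obstacle ahead.
    if scene.obstacle_distance_m < 2.0 * scene.speed_mps:
        return "slow_down"
    # Default behavior when no rule fires.
    return "proceed"


print(rule_based_decision(Scene(False, "green", 15.0, 10.0)))
```

Every decision is easy to trace to a single rule, but any situation the rules do not anticipate simply falls through to the default. In this sketch a yellow light is such a case: Scene(False, "yellow", 50.0, 10.0) yields "proceed" because no rule mentions yellow.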

2.2. Machine Learning Approaches

Machine learning algorithms have emerged as an alternative to rule-based systems, allowing AVs to learn from data and make decisions based on patterns rather than predefined rules.

Machine learning can be categorized into two principal types:

Supervised Learning: In supervised learning, models are trained on labeled datasets consisting of inputs (e.g., sensor data) and the corresponding correct outputs (e.g., object labels or actions taken). Zhao demonstrated the use of supervised learning for real-time pedestrian and vehicle detection, enhancing AVs’ ability to respond to dynamic environments.

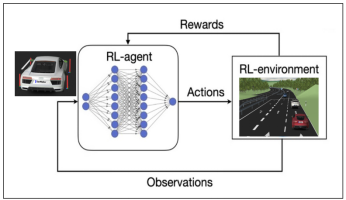

Reinforcement Learning (RL): RL is very promising for AVs: the vehicle learns optimal decision-making policies from the rewards and penalties it receives for its actions. Shalev-Shwartz demonstrated how RL can enhance AV decision-making in complex traffic scenarios, where real-time feedback allows the vehicle to adapt to varying road conditions.
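The reward-and-penalty loop described above can be illustrated with tabular Q-learning. The sketch below assumes a hypothetical one-dimensional road of six cells with a pedestrian zone at cells 2 and 3 in which accelerating is penalised; the rewards, learning rate, discount, and exploration rate are illustrative choices, not values from the cited work.

```python
import random

# Hypothetical 1-D road: positions 0..5, destination at 5, pedestrian zone at 2-3.
ACTIONS = ("cruise", "accelerate")  # move forward 1 or 2 cells
GOAL, ZONE = 5, {2, 3}


def step(pos, action):
    """Return (next_pos, reward, done). Reward values are illustrative assumptions."""
    if action == "accelerate" and pos in ZONE:
        return pos, -50.0, True          # unsafe maneuver: large penalty, episode ends
    nxt = min(pos + (2 if action == "accelerate" else 1), GOAL)
    if nxt == GOAL:
        return nxt, 10.0, True           # reached the destination
    return nxt, -1.0, False              # small time penalty for every step


def q_learning(episodes=2000, alpha=0.1, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    Q = {(s, a): 0.0 for s in range(GOAL + 1) for a in ACTIONS}
    for _ in range(episodes):
        pos, done = 0, False
        while not done:
            # Epsilon-greedy: explore with probability epsilon, otherwise exploit.
            if rng.random() < epsilon:
                a = rng.choice(ACTIONS)
            else:
                a = max(ACTIONS, key=lambda act: Q[(pos, act)])
            nxt, r, done = step(pos, a)
            # Temporal-difference target: no bootstrapping from terminal states.
            target = r if done else r + gamma * max(Q[(nxt, b)] for b in ACTIONS)
            Q[(pos, a)] += alpha * (target - Q[(pos, a)])
            pos = nxt
    return Q


Q = q_learning()
greedy = {s: max(ACTIONS, key=lambda a: Q[(s, a)]) for s in range(GOAL)}
print(greedy)
```

After training, the greedy policy cruises through cells 2 and 3 because accelerating there has been penalised, even though no driving rule was written by hand. Deep RL replaces the table Q with a neural network so that the same update can scale to continuous sensor states.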

The major advantage of machine learning is its ability to handle complex and unpredictable environments. However, supervised models require large amounts of labeled training data, and RL requires extensive interaction, usually in simulation. In addition, ML models, particularly deep learning models, are often regarded as black boxes whose decision processes are difficult to interpret.

2.3. Hybrid Systems

A hybrid system combines rule-based and ML components to draw on the strengths of both. Rule-based logic is typically used for well-defined tasks, such as obeying traffic signals, while ML handles more dynamic or unpredictable scenarios. For example, a hybrid system could use rule-based logic for lane keeping and speed control and ML models to predict the behavior of other traffic participants. Prior work has highlighted the merits of hybrid systems in giving AVs robust behavior across driving scenarios. Hybrid systems aim to balance transparency, adaptability, and performance: they generally offer more flexibility than purely rule-based systems and are easier to validate and deploy than purely ML-based ones.
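One way to realise this split is to let a learned policy propose an action and let hand-written rules override or bound it. In the sketch below, ml_policy is a hypothetical placeholder for any trained model, the state keys are invented for illustration, and the red-light rule assumes a constant-deceleration stop (required deceleration v²/2d); the acceleration limits are illustrative.

```python
from typing import Callable, Dict


def hybrid_decide(ml_policy: Callable[[Dict], float], state: Dict,
                  a_min: float = -6.0, a_max: float = 2.0) -> float:
    """Hybrid sketch: the learned policy proposes an acceleration [m/s^2];
    rule-based logic handles the well-defined cases and enforces hard limits."""
    proposal = ml_policy(state)
    # Rule: at a red light, brake at least hard enough to stop at the line,
    # using the constant-deceleration relation a = v^2 / (2 d).
    if state["light"] == "red":
        v = state["speed_mps"]
        d = max(state["dist_to_stop_line_m"], 0.1)  # avoid division by zero
        required_decel = v * v / (2.0 * d)
        proposal = min(proposal, -required_decel)
    # Rule: never exceed the vehicle's acceleration and braking envelope.
    return max(a_min, min(a_max, proposal))


def aggressive_model(state: Dict) -> float:
    # Stand-in for a learned model that always wants to speed up.
    return 1.5


print(hybrid_decide(aggressive_model,
                    {"light": "red", "speed_mps": 10.0, "dist_to_stop_line_m": 25.0}))
```

With a red light 25 m ahead at 10 m/s, the rule overrides the model’s +1.5 m/s² proposal with −2.0 m/s². The rule layer stays small and auditable, while the learned component can be retrained without touching it.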

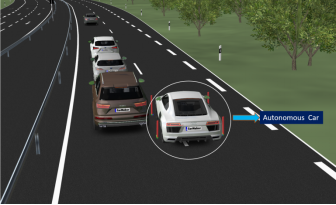

AV decision-making presents both technical and non-technical challenges. In this section, we consider the major obstacles developers must overcome for AVs to operate safely and effectively in complicated settings. Ensuring safety is perhaps the most fundamental challenge: in an emergency, an AV must always assess risk and choose the action that minimizes harm.

Goodall argues that such scenarios require robust risk-assessment algorithms to be built into AV decision-making. These algorithms weigh the possible consequences of alternative decisions, taking into account parameters such as speed, distance, and road condition, and select the least harmful option.

3.1. Safety Concerns and Risk Assessment

Safety is the top priority in autonomous vehicle (AV) decision-making, particularly when an accident becomes unavoidable. Risk assessment is the AV’s ability to determine, on the spot, the potential consequences of different actions and to choose the safest or least harmful alternative.

For instance, when an AV encounters an unexpected pedestrian crossing a busy street, it should consider all parameters that contribute to avoiding a collision, including speed, distance, and the environment of the road. Goodall reiterates that a significant challenge in this decision-making process arises from the fact that no universally accepted set of ethical guidelines is available [64].
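A first, purely physical ingredient of such an assessment is whether the vehicle can stop in time. The sketch below compares a simple stopping distance (reaction distance v·t_r plus braking distance v²/(2μg)) with the distance to the pedestrian and also computes time-to-collision. The friction coefficients, the 0.2 s system reaction time, and the decision labels are illustrative assumptions, not values from the cited work.

```python
G = 9.81  # gravitational acceleration [m/s^2]

# Approximate tyre-road friction coefficients (illustrative assumptions only).
FRICTION = {"dry": 0.7, "wet": 0.4, "icy": 0.1}


def stopping_distance(speed_mps, road="dry", reaction_s=0.2):
    """Distance covered during the system reaction time plus the braking
    distance v^2 / (2 * mu * g) under constant maximum deceleration."""
    mu = FRICTION[road]
    return speed_mps * reaction_s + speed_mps ** 2 / (2.0 * mu * G)


def time_to_collision(gap_m, closing_speed_mps):
    """Seconds until contact if neither party changes speed (inf if not closing)."""
    return gap_m / closing_speed_mps if closing_speed_mps > 0 else float("inf")


def assess_pedestrian_risk(speed_mps, pedestrian_distance_m, road):
    """Return a coarse decision and the safety margin in metres (negative = cannot stop)."""
    margin = pedestrian_distance_m - stopping_distance(speed_mps, road)
    decision = "brake" if margin > 0 else "brake_and_consider_evasive_steering"
    return decision, margin


for road in FRICTION:
    print(road, assess_pedestrian_risk(14.0, 20.0, road))
```

At 14 m/s (about 50 km/h) the stopping distance is roughly 17 m on a dry road but about 28 m on a wet one, so the same pedestrian 20 m ahead is avoidable by braking in one case and not in the other, which is why road condition must enter the assessment.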

Figure 1. Risk Assessment in Autonomous Vehicles

Because real-world traffic is unpredictable and highly dynamic, risk assessment becomes much more complicated. A model that relies solely on historical data may fail in situations that deviate from past patterns. Müller and Gogoll therefore stress the need for dynamic risk-assessment models that account for the present context and for changes in the environment over time.

3.2. Ethical and Moral Dilemmas

An important challenge in AV decision-making is resolving ethical conflicts, particularly when harm is unavoidable. The trolley problem lies at the core of the ethical debate on autonomous driving: should an AV prioritize its passengers or minimize harm to pedestrians when an accident cannot be avoided?

Ethical decision-making in AVs requires formally determining which values the vehicle’s choices should reflect, such as minimizing damage or maximizing fairness within society (Figure 2). This, however, raises issues of value alignment and public trust [65].

Figure 2. Ethical Dilemmas in Autonomous Vehicle Decision-making

Lin [65] affirms that ethical frameworks must guide these decisions. However, such frameworks are not universal, because moral views vary across societies, which adds another layer of complexity to how AVs should be programmed.

3.3. Real-time Processing and Latency

AVs rely on real-time processing of critical functions so that decisions are made in time. Sensor fusion requires the simultaneous processing of data from multiple sensors (LIDAR, radar, cameras, etc.) to make decisions about vehicle motion. High processing latency slows the vehicle’s response and thus jeopardizes its ability to avoid accidents.

In real-time decision-making, latency becomes a problem when sensor data are processed with delays, when subsystems interact improperly, or when computational resources cannot keep up. Real-time systems should therefore be optimized for low-latency processing, which may involve special-purpose hardware or edge computing (processing data locally on the vehicle rather than on remote cloud servers).

Techniques such as GPU acceleration and parallel processing reduce latency by speeding up computation. Such optimizations help ensure that AVs respond in real time when needed, reducing the potential for accidents.
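The cost of latency is easiest to see as distance travelled before the vehicle can react. The short calculation below assumes a hypothetical per-stage latency budget for a sensing-to-actuation pipeline; the stage names and millisecond values are illustrative, not measurements from any particular system.

```python
# Hypothetical per-stage latencies of a sensing-to-actuation pipeline [ms].
PIPELINE_MS = {"perception": 40.0, "fusion": 10.0, "planning": 30.0, "control": 5.0}


def blind_distance_m(speed_kmh, latency_ms):
    """Distance travelled while the pipeline is still processing a frame."""
    return (speed_kmh / 3.6) * (latency_ms / 1000.0)


total_ms = sum(PIPELINE_MS.values())                  # 85 ms end to end
print(round(blind_distance_m(108.0, total_ms), 2))   # 30 m/s * 0.085 s = 2.55 m
```

At highway speed every 10 ms saved in any stage gives back about 0.3 m of reaction distance, which is why latency budgets are usually allocated and tracked per stage.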

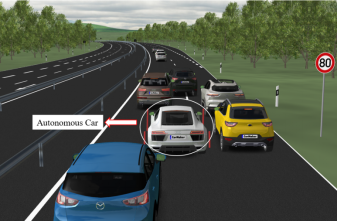

3.4. Complex Urban Environments

Real-world AV deployments must handle highly complex urban scenarios involving pedestrians, cyclists, and other vehicles. Unlike rural roads or highways, city streets feature frequent lane changes, often unpredictable pedestrian activity, and widely varying traffic conditions.

AVs must therefore treat the prediction of pedestrian and other road-user behavior as part of their core operational constraints. Advanced decision-making systems should simulate several scenarios that predict the future state of the environment and the vehicle’s resulting behavior.

For example, urban operating areas routinely present situations such as:

(1) Pedestrians unexpectedly stepping off the curb at intersections

(2) Bicycles weaving through traffic

(3) Unpredictable human driving behavior, such as sudden lane changes or illegal left turns

Navigating these environments safely requires not only advanced perception and planning algorithms but also predictive models that support real-time decision-making (Figure 3) [66].
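A minimal form of this scenario-based prediction rolls each road user forward under a few behavioral hypotheses and checks the closest predicted approach to the ego vehicle. The sketch below assumes constant-velocity motion (a simple baseline, not the predictive models of the cited work), a 3 s horizon, and a hypothetical pedestrian who either waits at the curb or steps off it.

```python
import numpy as np


def predict_cv(pos, vel, horizon_s=3.0, dt=0.1):
    """Constant-velocity rollout: positions at t = 0, dt, ..., horizon_s."""
    t = np.arange(0.0, horizon_s + 1e-9, dt)[:, None]
    return np.asarray(pos, dtype=float) + t * np.asarray(vel, dtype=float)


def min_separation(ego_pos, ego_vel, other_pos, other_vel, horizon_s=3.0, dt=0.1):
    """Closest predicted distance between ego and another agent, and when it occurs."""
    ego = predict_cv(ego_pos, ego_vel, horizon_s, dt)
    other = predict_cv(other_pos, other_vel, horizon_s, dt)
    dists = np.linalg.norm(ego - other, axis=1)
    k = int(np.argmin(dists))
    return float(dists[k]), k * dt


# Ego at the origin driving at 10 m/s along x; pedestrian 20 m ahead, 3 m to the side.
scenarios = {"waits": (0.0, 0.0), "steps_off_curb": (0.0, 1.5)}
for name, ped_vel in scenarios.items():
    d, t = min_separation((0.0, 0.0), (10.0, 0.0), (20.0, -3.0), ped_vel)
    print(name, round(d, 2), round(t, 1))
```

If the pedestrian waits, the closest approach is 3 m; if they step off the curb at 1.5 m/s, both agents are predicted to reach the same point at t = 2 s, so a planner weighing both hypotheses would slow down.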

Figure 3. Urban Navigation Challenges for Autonomous Vehicles

The control subsystem, which is intrinsically linked to decision-making, deserves closer attention. Perception, prediction, planning, and decision logic are often the focus of analysis, while control is treated primarily as an execution layer; yet its role and significance within the decision-to-action pipeline have evolved considerably.

The control subsystem has developed significantly over the years, progressing from classical approaches such as PID control to model predictive control (MPC) and, more recently, to learning-based strategies. Tracing this evolution gives a more complete view of how decision-making integrates with control systems:

· Classical Control Methods: Initially, control in autonomous systems relied heavily on traditional techniques like PID controllers, which are still commonly used due to their simplicity and effectiveness in many contexts. However, their ability to handle complex, nonlinear, and dynamic environments is limited.

· Model Predictive Control (MPC): As systems grew more sophisticated, MPC emerged as a preferred method, providing more advanced capabilities such as the ability to handle constraints, predict future states, and optimize over a finite time horizon. MPC plays a critical role in ensuring dynamic feasibility and maintaining system stability, which is particularly important in autonomous vehicles where passenger comfort and safety are paramount.

· Learning-Based Control: More recently, there has been a shift toward learning-based control methods. These techniques leverage data-driven models to learn optimal control policies, adapting to a broader range of scenarios. Reinforcement learning (RL), in particular, has gained attention for its potential to optimize control strategies directly through interaction with the environment, potentially overcoming the limitations of model-based methods.

· End-to-End Policy Learning: In parallel, end-to-end policy learning approaches have become increasingly popular, where control and decision-making are learned simultaneously as a single unified model. This allows the system to automatically adapt and optimize both high-level decisions and low-level control actions without relying on hand-crafted models.

· Emerging Trends in Low-Level Control: One of the most exciting trends is the integration of reinforcement learning directly into low-level control systems. By enabling the control layer to adapt and learn from real-time feedback, RL promises to significantly enhance the system’s ability to handle unforeseen events and complex dynamics, such as those encountered in high-speed or highly dynamic environments.
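To ground the classical end of this progression, the sketch below implements a discrete PID controller for longitudinal speed tracking on a point-mass model. The gains, actuator limits, drag coefficient, and the conditional-integration anti-windup (integrating only while the output is unsaturated, a common but not universal choice) are all illustrative assumptions.

```python
class PID:
    """Discrete PID controller with output limits and conditional-integration anti-windup."""

    def __init__(self, kp, ki, kd, dt, u_min, u_max):
        self.kp, self.ki, self.kd, self.dt = kp, ki, kd, dt
        self.u_min, self.u_max = u_min, u_max
        self.integral = 0.0
        self.prev_error = None

    def update(self, setpoint, measurement):
        error = setpoint - measurement
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / self.dt
        self.prev_error = error
        candidate = self.integral + error * self.dt
        u_raw = self.kp * error + self.ki * candidate + self.kd * derivative
        u = min(max(u_raw, self.u_min), self.u_max)
        if u == u_raw:  # accumulate the integral only while the actuator is not saturated
            self.integral = candidate
        return u


def simulate_speed_tracking(target_mps=20.0, duration_s=30.0, dt=0.05):
    """Point-mass longitudinal model dv/dt = a - c*v with an assumed drag coefficient c."""
    pid = PID(kp=0.8, ki=0.2, kd=0.05, dt=dt, u_min=-6.0, u_max=2.0)
    v, drag = 0.0, 0.01
    for _ in range(round(duration_s / dt)):
        a = pid.update(target_mps, v)   # commanded acceleration [m/s^2]
        v += (a - drag * v) * dt        # explicit Euler integration of the plant
    return v


print(round(simulate_speed_tracking(), 2))
```

The controller is simple and effective on this single-input plant, but it has no notion of constraints or future states beyond clipping its output, which is the gap MPC addresses.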

MPC therefore plays a central role in ensuring dynamic feasibility, maintaining passenger comfort, and promoting safety, while the integration of learning-based methods, including reinforcement learning, is beginning to transform low-level control and points to the future direction of autonomous control systems.
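The receding-horizon idea behind MPC can be shown with a deliberately small brute-force version: at each step, enumerate short acceleration sequences, discard those violating a hard jerk limit, score the rest on speed tracking, effort, and jerk, apply only the first action, and re-plan. The candidate acceleration grid, horizon, weights, and limits below are illustrative assumptions; practical MPC solves a continuous optimization problem rather than enumerating.

```python
import itertools

# Candidate accelerations [m/s^2]; a coarse grid keeps brute-force search tractable.
A_SET = (-2.0, -1.0, 0.0, 1.0, 2.0)


def mpc_step(v, a_prev, v_ref, horizon=4, dt=0.5, j_max=3.0,
             w_v=1.0, w_a=0.1, w_j=0.05):
    """Return the first acceleration of the lowest-cost feasible sequence (None if none)."""
    best_cost, best_first = float("inf"), None
    for seq in itertools.product(A_SET, repeat=horizon):
        cost, vk, a_last, feasible = 0.0, v, a_prev, True
        for ak in seq:
            jerk = (ak - a_last) / dt
            if abs(jerk) > j_max or vk + ak * dt < 0.0:
                feasible = False  # violates the comfort (jerk) limit or would reverse
                break
            vk += ak * dt         # predict speed with a simple integrator model
            cost += w_v * (vk - v_ref) ** 2 + w_a * ak ** 2 + w_j * jerk ** 2
            a_last = ak
        if feasible and cost < best_cost:
            best_cost, best_first = cost, seq[0]
    return best_first


# Receding horizon: optimise, apply only the first action, then re-plan.
v, a, dt = 10.0, 0.0, 0.5
history = []
for _ in range(20):
    a = mpc_step(v, a, v_ref=15.0, dt=dt)
    v += a * dt
    history.append((v, a))
print(history[:8])
```

The hard jerk constraint guarantees that consecutive accelerations never differ by more than 1.5 m/s² here, which is the dynamic-feasibility and comfort role of MPC discussed above, while the soft cost terms trade tracking accuracy against smoothness.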

Many emerging technologies will impact the forthcoming decision-making processes in autonomous vehicle systems, strengthening their capabilities, enhancing safety, and appropriately handling existing issues.

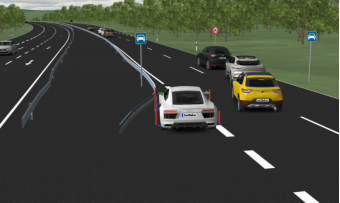

4.1. Deep Reinforcement Learning (DRL)

Deep reinforcement learning (DRL) is a very active topic in autonomous driving, mainly because it allows a vehicle to refine its decision-making through trial and error. Unlike conventional decision-making methods, DRL lets an AV improve its behavior gradually through interaction with its environment, and it could change the way AVs handle complex driving situations. Because DRL agents can learn near-optimal decisions in simulated environments, including under unpredictable conditions, DRL could in the long run help AVs cope with new types of driving environments without being manually reprogrammed.

DRL could enable AVs to handle highly dynamic environments, such as urban traffic, by anticipating the actions of other vehicles or pedestrians and adjusting their behavior accordingly. The difficulties lie in the large amounts of training data and computational power required, and in ensuring safety during training (Figure 4) [67].
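Two ingredients that DQN-style DRL agents commonly use to cope with data demands and training stability are an experience replay buffer, which reuses past transitions and breaks their temporal correlation, and bootstrapped targets computed from a separate target network. The sketch below shows only these two pieces; the neural network itself is omitted, and next_q_values simply stands in for the target network’s output on a sampled batch.

```python
import random
from collections import deque

import numpy as np


class ReplayBuffer:
    """Fixed-capacity store of (state, action, reward, next_state, done) transitions."""

    def __init__(self, capacity, seed=0):
        self.buffer = deque(maxlen=capacity)  # oldest transitions are dropped first
        self.rng = random.Random(seed)

    def push(self, state, action, reward, next_state, done):
        self.buffer.append((state, action, reward, next_state, done))

    def sample(self, batch_size):
        """Uniformly sample a batch and return one NumPy array per field."""
        batch = self.rng.sample(list(self.buffer), batch_size)
        states, actions, rewards, next_states, dones = map(np.array, zip(*batch))
        return states, actions, rewards, next_states, dones

    def __len__(self):
        return len(self.buffer)


def dqn_targets(rewards, next_q_values, dones, gamma=0.99):
    """TD targets y = r + gamma * (1 - done) * max_a' Q_target(s', a')."""
    return rewards + gamma * (1.0 - dones.astype(float)) * next_q_values.max(axis=1)


y = dqn_targets(np.array([1.0, -1.0]),
                np.array([[0.5, 2.0], [3.0, 1.0]]),  # stand-in target-network outputs
                np.array([False, True]), gamma=0.9)
print(y)
```

The online network would then be trained to regress its Q(s, a) towards y, and the target network would be refreshed from it periodically.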

Figure 4. Deep Reinforcement Learning in Autonomous Vehicles

4.2. Explainable AI (XAI)

As AV decision-making increasingly relies on complex machine learning algorithms, the need for explainable AI (XAI) has grown. XAI provides human-understandable explanations of the rationale behind an AV’s decisions. This is especially important when an AV must make safety-critical decisions, such as avoiding a collision or negotiating a complex traffic scenario. Developers, regulators, and the public must be able to trust that AV decisions are sound and consistent with safety protocols. By revealing why a particular decision was made, XAI can also make it easier for engineers to debug and improve decision-making algorithms. This can increase public acceptance of AVs and help ensure that AI systems are aligned with human values [68].

4.3. Cooperative Decision-making (Vehicle-to-Vehicle and Vehicle-to-Infrastructure Communication)

Cooperative decision-making represents the next stage of AV development, in which vehicles communicate with one another (vehicle-to-vehicle, V2V) and with infrastructure systems (vehicle-to-infrastructure, V2I). This allows AVs to share real-time data about their positions, speeds, and intentions and to coordinate their actions, improving traffic flow and safety. For instance, an AV approaching an intersection may receive data from nearby vehicles and adjust its speed or path to avoid a collision. AVs may also exchange information with traffic lights or roadside sensors to optimize their speed and reduce congestion (Figure 5) [69].

Figure 5. Cooperative Decision-making in AVs

Cooperative systems are likely to become essential to the future of AV decision-making. They can improve not only traffic safety but also efficiency, especially in dense urban traffic.

The ongoing shift from modular pipeline architectures toward end-to-end learning represents a significant evolution in the design of autonomous systems and merits closer examination. Traditionally, most autonomous systems have used modular designs in which each component, such as perception, prediction, planning, decision-making, and control, operates as a distinct layer. This approach is favored for its transparency: each subsystem can be independently analyzed, tested, and validated, which is crucial for safety certification and regulatory compliance. Modularity also makes the system more interpretable, which simplifies debugging and tuning of individual components. These systems offer safety, stability, and clear validation pathways. However, they often fail to optimize performance across the system as a whole, because subsystems are typically designed in isolation rather than with cross-layer interactions in mind. End-to-end learning systems, which map raw sensor inputs directly to control outputs through deep learning models, promise to overcome this limitation. They optimize the entire pipeline from perception to action jointly, so the model learns the interactions among all components simultaneously and can potentially achieve higher efficiency and performance. This approach also removes the need for the hand-crafted features and rule-based decision logic common in modular systems. Industry efforts such as Tesla's Autopilot are often cited as illustrating the potential of this architecture to reduce latency and improve real-time decision-making. Despite their promise, however, end-to-end systems introduce challenges related to interpretability and verification.
Because deep learning models are largely black boxes, the reasoning behind their decisions is difficult to understand. This raises concerns about safety, reliability, and accountability in safety-critical applications such as autonomous vehicles. Regulatory approval also becomes more complicated, because the lack of transparency hinders traditional safety certification processes, which often rely on exhaustive testing and formal verification of each system component. In response, hybrid approaches that combine the strengths of both paradigms are gaining traction. Such systems can retain modularity for critical components, such as perception and planning, while using end-to-end learning for tasks that benefit from holistic optimization, such as decision-making and control. The aim is to pair the safety and transparency of modular components with the performance gains of end-to-end learning. Hybrid systems also allow the modular and the learning-based components to be improved independently and iteratively, so the system can be upgraded without a complete overhaul. Hybrid architectures balance the need for safety certification against the desire for high performance. They may therefore offer the most practical and future-proof solution for autonomous systems, particularly where both safety and efficiency are paramount.
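One common way to build such a hybrid is to let a learned component propose actions while a simple, verifiable rule-based monitor can override them. The sketch below assumes a car-following setting. `learned_policy` is a hypothetical stand-in for a trained network. The safety check is a simplified version of the longitudinal distance rule in Responsibility-Sensitive Safety (RSS, Shalev-Shwartz et al.); it omits the acceleration the follower may apply during its reaction time. All parameter values are illustrative assumptions.

```python
from typing import Tuple


def learned_policy(gap_m: float, ego_mps: float, lead_mps: float) -> float:
    """Stand-in for a learned (e.g., end-to-end) policy; returns acceleration in m/s^2.

    Hypothetical: a real system would evaluate a neural network here.
    """
    return 0.05 * (gap_m - 2.0 * ego_mps) + 0.3 * (lead_mps - ego_mps)


def safe_gap_m(ego_mps: float, lead_mps: float, reaction_s: float = 0.5,
               ego_brake: float = 4.0, lead_brake: float = 8.0) -> float:
    """Simplified RSS-style minimum gap.

    The ego vehicle reacts after reaction_s and then brakes at ego_brake, while
    the lead vehicle may brake as hard as lead_brake (worst case for the follower).
    """
    d = (ego_mps * reaction_s
         + ego_mps ** 2 / (2.0 * ego_brake)
         - lead_mps ** 2 / (2.0 * lead_brake))
    return max(0.0, d)


def hybrid_action(gap_m: float, ego_mps: float, lead_mps: float,
                  a_max: float = 2.0, b_max: float = 8.0) -> Tuple[float, bool]:
    """Return (acceleration, overridden).

    The learned proposal is used unless the rule-based monitor finds the gap unsafe.
    """
    if gap_m < safe_gap_m(ego_mps, lead_mps):
        return -b_max, True  # verifiable rule takes over: brake hard
    proposal = learned_policy(gap_m, ego_mps, lead_mps)
    return min(max(proposal, -b_max), a_max), False  # clamp to actuator limits


if __name__ == "__main__":
    print(hybrid_action(80.0, 20.0, 20.0))  # learned proposal used
    print(hybrid_action(10.0, 20.0, 10.0))  # monitor overrides: (-8.0, True)
```

In this arrangement only the monitor needs to be verified to guarantee the gap constraint. The learned policy can then be retrained or replaced without re-verifying the safety rule, which reflects the independent-upgrade property of hybrid systems.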

The computational aspects of real-time decision-making are critical to the successful deployment of AVs, particularly as these vehicles rely on edge computing and DRL to process data from multiple sensors in real time. Processing latency is a key challenge, because AVs must make fast, reliable decisions in complex and dynamic environments. Even small delays in data processing can lead to unsafe outcomes in critical scenarios such as collision avoidance. To reduce latency, modern AV systems increasingly use hardware acceleration, such as graphics processing units (GPUs) and tensor processing units (TPUs) or similar neural-network accelerators. This hardware allows computationally intensive algorithms such as DRL to run in real time. Even so, performance is often constrained by hardware limitations. Many AVs rely on edge computing, in which computation takes place on board or close to the vehicle; this reduces the need for cloud communication and enables faster decisions. While edge computing offers significant benefits, it also imposes limits on processing power, memory, and bandwidth. Current hardware, including GPUs and specialized chips, may encounter bottlenecks when running large-scale DRL models or when processing lidar, radar, and camera feeds in parallel.
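Elementary kinematics shows why latency matters. During a processing delay the vehicle keeps moving at its current speed, so it covers speed × latency before braking even begins. The total stopping distance is that distance plus speed² / (2 × deceleration) for constant deceleration. The sketch below uses an assumed speed of 30 m/s and an assumed deceleration of 7 m/s²; both are illustrative values, not measurements from any vehicle.

```python
def latency_distance_m(speed_mps: float, latency_s: float) -> float:
    """Distance travelled before the vehicle starts to react."""
    return speed_mps * latency_s


def stopping_distance_m(speed_mps: float, latency_s: float, decel_mps2: float) -> float:
    """Latency distance plus braking distance v^2 / (2a) at constant deceleration."""
    return latency_distance_m(speed_mps, latency_s) + speed_mps ** 2 / (2.0 * decel_mps2)


if __name__ == "__main__":
    speed = 30.0   # m/s, about 108 km/h (assumed)
    decel = 7.0    # m/s^2, assumed braking deceleration on a dry road
    for latency_ms in (50, 100, 200):
        t = latency_ms / 1000.0
        print(f"{latency_ms:>3} ms: +{latency_distance_m(speed, t):.1f} m before braking, "
              f"{stopping_distance_m(speed, t, decel):.1f} m total")
```

At 30 m/s, every additional 100 ms of end-to-end latency adds 3 m of travel before the brakes are applied. Under these assumptions, the total stopping distance grows from about 65.8 m at 50 ms to about 70.3 m at 200 ms.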

Energy efficiency is also a growing concern, particularly for autonomous vehicles that rely on battery power for propulsion. The high computational demands of DRL algorithms increase energy consumption, which reduces the overall efficiency of AV systems. To address this, researchers are exploring low-power computing architectures and energy-efficient algorithms that balance computational performance against energy use. Future advances in hardware design, such as energy-efficient processors built for autonomous systems, will be crucial. They will help AVs remain sustainable and operate for long periods without excessive battery depletion.
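A back-of-envelope calculation shows how a constant computing load erodes the range of a battery-electric AV. Computing adds compute_kW / average_speed kWh per km of driving. Range therefore scales with 1 / (drive consumption + compute consumption) instead of 1 / drive consumption. The 1 kW load and 0.15 kWh/km traction consumption below are hypothetical values chosen for illustration. Holding traction consumption fixed across speeds is a further simplification.

```python
def range_reduction(compute_kw: float, avg_speed_kmh: float,
                    drive_kwh_per_km: float) -> float:
    """Fractional loss of driving range caused by a constant computing load.

    Compute energy per km is compute_kw / avg_speed_kmh (kWh/km), so range with
    computing is battery / (drive + compute) instead of battery / drive.
    """
    compute_kwh_per_km = compute_kw / avg_speed_kmh
    return compute_kwh_per_km / (drive_kwh_per_km + compute_kwh_per_km)


if __name__ == "__main__":
    # Hypothetical values: 1 kW computing load, 0.15 kWh/km traction consumption.
    for speed in (30.0, 100.0):
        loss = range_reduction(1.0, speed, 0.15)
        print(f"{speed:>5.0f} km/h: {100 * loss:.1f}% less range")
```

Under these assumptions the same computer costs roughly 18% of range at an urban average of 30 km/h but only about 6% at 100 km/h. Slow urban driving spreads each hour of computation over fewer kilometers, which is one reason low-power hardware matters most for robotaxi-style operation.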

Case studies from leading companies in the AV field illustrate how decision-making works in real-world deployment. They show how different AVs handle complex decision-making scenarios and which technologies they use.

5.1. Waymo (Google)

Waymo, which began as Google's self-driving car project, is one of the first companies in autonomous driving technology and has been testing and deploying self-driving vehicles for many years. Its decision-making combines multiple layers of machine learning, computer vision, and sensor fusion. LIDAR, radar, and cameras are used to perceive and interpret the environment, and deep neural networks then support decision-making and path planning.

Waymo's decision-making algorithms handle a wide range of driving scenarios, from ordinary streets to highways. A distinctive aspect of Waymo's approach is simulated driving. In simulation, the vehicle's decision-making algorithms are tested against thousands of scenarios drawn from real-world driving before the vehicle encounters them in actual traffic [70].

Waymo's vehicles have demonstrated a strong safety record, supported by machine learning models that are continually refined using real-world driving data. However, Waymo has had to contend with unpredictable human behavior in urban environments. This created the need for models that predict the actions of other road users.

5.2. Tesla Autopilot

Tesla's Autopilot, an SAE Level 2 driver-assistance system, is another prominent example of deployed automated decision-making. Tesla vehicles perceive their environment primarily with cameras; some models have also used radar and ultrasonic sensors. Unlike Waymo, Tesla does not rely on LIDAR. This makes its system less expensive but has raised concerns about detecting distant obstacles and operating in poor visibility.

Tesla's decision-making algorithms draw on supervised and reinforcement learning, allowing the vehicle to make driving decisions based on patterns learned from large volumes of real-world driving data. A key feature of Tesla's system is its ability to anticipate the behavior of other drivers, which enables rapid real-time reactions to sudden lane changes or other maneuvers [71].

Tesla also faces challenges from edge cases and from legal and regulatory scrutiny over safety. For example, the system has had difficulty distinguishing objects under certain roadway conditions. Nevertheless, the company continues to update its algorithms and decision-making frameworks using data gathered from its large fleet of vehicles.

5.3. Uber ATG (Advanced Technologies Group)

Uber ATG focused on decision-making in the context of vehicle-to-vehicle (V2V) and vehicle-to-infrastructure (V2I) communication. Combining machine learning, computer vision, and sensor fusion made its decision-making process more effective. The system could detect objects and obstacles at long range and make braking or lane-change decisions by integrating data from surrounding vehicles and infrastructure such as traffic signals. Uber's self-driving cars performed comparatively well on highways, but problems emerged once they operated on city streets. These problems stemmed largely from the unpredictability of human behavior, which required complex decision algorithms to handle unforeseen actions.

Uber suspended its autonomous vehicle testing after a fatal pedestrian crash in Tempe, Arizona, in 2018. It later exited the field, agreeing in 2020 to sell ATG to Aurora. The incident nevertheless yielded vital lessons that have informed safety protocols, risk assessment, and decision-making for autonomous vehicles [72].

As the technology underpinning autonomous driving continues to advance, regulators and policymakers are increasingly turning their attention to the rules that should govern AV decision-making, especially in high-stakes or emergency situations. As a result, AV decision-making is increasingly shaped by a rapidly changing ethical and regulatory environment.

6.1. Legal Challenges and Liability

A serious issue in AV decision-making is responsibility in the event of an accident. Because AVs are designed to operate without human intervention, it is unclear whether responsibility should rest with the manufacturer, the developer of the decision-making algorithms, or the vehicle owner. Incidents such as the Uber crash have heightened public scrutiny of AV decision-making. Governments are beginning to consider ways to hold manufacturers accountable for the decisions their vehicles make. According to Lin [6], clear liability rules would reassure the public and increase confidence in AV operation and further development [73]. The legal aspects of AV decision-making have long been debated, and different countries have taken different approaches. For instance, the European Union and the United States handle AV certification and testing under distinct sets of guidelines.

6.2. Ethical Guidelines for Decision-making

Ethical guidelines are needed so that AVs make decisions compatible with society's values. In an unavoidable accident, for instance, should an AV prioritize the safety of its occupants? Should it instead minimize total harm to all road users (a utilitarian perspective), or adhere to fixed moral rules regardless of the outcome (a deontological perspective)? The challenge lies in defining these values clearly, since they often differ across cultures. Programming AVs to act according to ethical principles broadly accepted by society may be a solution, although no universally accepted ethical framework for AVs exists yet. As AVs become more integrated into society, these ethical considerations will continue to evolve, and new guidelines and standards will emerge to address them.

Simulation and digital twin technologies have become indispensable tools for developing and validating autonomous decision-making systems. As AI-driven systems grow more complex, these technologies provide a safe and scalable environment for testing algorithms. This matters most in scenarios that are too rare, risky, or difficult to recreate in real-world settings. High-fidelity simulators such as CARLA and NVIDIA DRIVE Sim have transformed how autonomous systems are trained and tested. These platforms create virtual environments that simulate urban traffic, weather conditions, and other real-world dynamics, so autonomous driving policies can be tested under diverse and extreme conditions. Developers can expose their systems to corner cases, such as sudden pedestrian crossings or aggressive driver behavior, that would be difficult or unsafe to replicate in physical tests. This accelerates development and improves safety and reliability by identifying potential failures before they occur on the road. Despite these advantages, the sim-to-real transfer problem remains a significant challenge. Although simulators provide detailed and controlled testing environments, the gap between virtual and real-world performance can be substantial. Sensor noise, real-world unpredictability, and physical system dynamics often cause a mismatch between how a system behaves in simulation and how it performs on the road. This is especially true for machine learning models that are trained in simulation but must then handle the stochastic nature of real-world environments.
Researchers are developing techniques such as domain adaptation and sim-to-real reinforcement learning to reduce these discrepancies. Even so, ensuring that policies developed in simulation transfer reliably to real-world deployment remains challenging. High-fidelity simulators and the sim-to-real problem therefore represent both an opportunity and an obstacle in shaping the next generation of autonomous systems. Both must be addressed to achieve robust and scalable real-world deployment.
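One widely used mitigation, domain randomization, samples simulator parameters from ranges rather than fixing them. A policy is then trained or evaluated across many plausible versions of reality. The sketch below applies the idea to evaluation only. It assumes a toy braking scenario with hypothetical parameter ranges for friction, sensor noise, and latency, and it does not use the CARLA or DRIVE Sim APIs.

```python
import random
from dataclasses import dataclass

G = 9.81  # gravitational acceleration, m/s^2


@dataclass
class SimParams:
    friction: float        # tyre-road friction coefficient
    sensor_noise_m: float  # std. dev. of the range-measurement error
    latency_s: float       # perception-to-braking latency


# Conditions the toy policy was "tuned" for (hypothetical).
NOMINAL = SimParams(friction=0.8, sensor_noise_m=0.0, latency_s=0.1)


def sample_params(rng: random.Random) -> SimParams:
    """Domain randomization: draw each parameter from an assumed range."""
    return SimParams(
        friction=rng.uniform(0.3, 1.0),
        sensor_noise_m=rng.uniform(0.0, 2.0),
        latency_s=rng.uniform(0.05, 0.3),
    )


def stopping_distance_m(speed: float, friction: float, latency: float) -> float:
    """Distance covered during the latency plus braking at friction * G."""
    return speed * latency + speed ** 2 / (2.0 * friction * G)


def episode_succeeds(params: SimParams, rng: random.Random,
                     speed: float = 20.0, margin_m: float = 5.0) -> bool:
    """Toy braking scenario toward a static obstacle.

    The policy brakes when the measured range drops below the stopping distance
    it predicts under NOMINAL conditions plus a margin. Because the measurement
    includes noise, the true range at that moment is the threshold minus the noise.
    """
    threshold = stopping_distance_m(speed, NOMINAL.friction, NOMINAL.latency_s) + margin_m
    true_range = threshold - rng.gauss(0.0, params.sensor_noise_m)
    return true_range > stopping_distance_m(speed, params.friction, params.latency_s)


def success_rate(randomize: bool, n: int = 10_000, seed: int = 0) -> float:
    """Fraction of episodes in which the vehicle stops before the obstacle."""
    rng = random.Random(seed)
    successes = 0
    for _ in range(n):
        params = sample_params(rng) if randomize else NOMINAL
        successes += episode_succeeds(params, rng)
    return successes / n


if __name__ == "__main__":
    print(f"nominal simulator:    {success_rate(randomize=False):.3f}")
    print(f"randomized simulator: {success_rate(randomize=True):.3f}")
```

By construction, the policy stops in time in every nominal episode. It fails in a noticeable share of randomized episodes, mainly on low-friction draws. Exposing such failures in simulation is the motivation for training under randomized conditions before real-world deployment.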

China's centralized, top-down approach to AV regulation contrasts with the more incremental and sometimes contradictory frameworks found in other regions. European Union regulations are advancing but remain cautious, focusing on safety, liability, and public acceptance. The regulatory environment in the United States, meanwhile, remains fragmented, with state-level laws and federal guidelines frequently out of step with technological advances. These differences reflect the geopolitical diversity of AV deployment strategies. They also show how different regulatory approaches are shaping the future of autonomous mobility, whether top-down and government-led (as in China) or bottom-up and industry-driven (as in many Western countries).

A global perspective therefore offers a more nuanced understanding of how regulatory strategies are evolving worldwide and how they affect the development and deployment of autonomous vehicles across regions.

Once widespread deployment of autonomous vehicles (AVs) begins, their success or failure in real-world settings will hinge on the continued development of their decision-making capabilities. Achieving AV technologies that are not only safe but also aligned with human values will require the collaboration of diverse interdisciplinary teams—engineers, ethicists, policymakers, and the public. To ensure that AV decision-making is safe, efficient, and fair, algorithms must evolve to address not only technical challenges but also ethical and regulatory concerns. One of the next critical steps in granting AVs full autonomy will be equipping them with systems capable of navigating both complex traffic environments and situations that involve ethical or societal dilemmas. In the short term, achieving improved explainability and robustness in decision-making—particularly in mixed traffic scenarios where AVs interact with human-driven vehicles—will be paramount. This will involve enhancing explainable AI (XAI) methods and improving the transparency of AV decision processes, ensuring that both regulatory bodies and the public can trust and understand how these systems operate. Mid-term objectives will focus on advancing Level 4 autonomy in urban environments, where cooperative perception systems that integrate V2V (Vehicle-to-Vehicle) and V2I (Vehicle-to-Infrastructure) communication will be crucial. These advancements will allow AVs to operate safely and efficiently in dense, dynamic settings while sharing information with both other vehicles and the surrounding infrastructure. Long-term goals will involve the development of large-scale multi-agent coordination systems, enabling AVs to seamlessly work together within a smart city ecosystem. Achieving value-aligned AI in the context of AVs will be essential, ensuring that AVs make decisions that reflect societal values and ethical standards, not just technical efficiency. 
Such systems will require continual learning, robust ethical frameworks, and highly advanced decision-making architectures that can adapt to unforeseen challenges.

In conclusion, while autonomous vehicles will play a pivotal role in building safer, more efficient transportation ecosystems, achieving this future vision will require ongoing research and technological innovation. By addressing the technical, ethical, and legal challenges discussed in this paper, and through strategic, actionable research goals, the full potential of autonomous vehicle decision-making can be realized. Continued development in areas like deep reinforcement learning (DRL), V2X communication, and ethically grounded AI will pave the way for a future where AVs not only enhance transportation safety but also contribute to broader societal goals. Autonomous vehicle decision-making remains a central factor in the realization of this future, and further research will be essential to overcoming the challenges that lie ahead.

Author Contributions: All the work was contributed by Ali Rizehvandi.

Funding: None.

Ethical Approval: Not applicable.

Informed Consent Statement: Not applicable.

Data Availability Statement: Not applicable.

Acknowledgments: Not applicable.

Conflicts of Interest: The author declares no conflicts of interest.

Thrun, S. Toward robotic cars. Commun. ACM 2010, 53(4), 99–106.

Wang, Y.; Jiang, J.; Li, S.; Li, R.; Xu, S.; Wang, J.; Li, K. Decision-making driven by driver intelligence and environment reasoning for high-level autonomous vehicles: a survey. IEEE Trans. Intell. Transp. Syst. 2023, 24(10), 10362–10381.

Ziegler, J.; Bender, P.; Schreiber, M.; Lategahn, H.; Strauss, T.; Stiller, C.; Dang, T.; Franke, U.; Appenrodt, N.; Keller, C.G.; Kaus, E.; Herrtwich, R.G.; Rabe, C.; Pfeiffer, D.; Lindner, F.; Stein, F.; Erbs, F.; Enzweiler, M.; Knöppel, C.; Hipp, J.; Haueis, M.; Trepte, M.; Brenk, C.; Tamke, A.; Ghanaat, M.; Braun, M.; Joos, A.; Fritz, H.; Mock, H.; Hein, M.; Zeeb, E. Making Bertha drive: An autonomous journey on a historic route. IEEE Intell. Transp. Syst. Mag. 2014, 6(2), 8–20.

Urmson, C.; Anhalt, J.; Bagnell, D.; Baker, C.; Bittner, R.; Clark, M. N.; Dolan, J.; Duggins, D.; Galatali, T.; Geyer, C.; Gittleman, M.; Harbaugh, S.; Hebert, M.; Howard, T. M.; Kolski, S.; Kelly, A.; Likhachev, M.; McNaughton, M.; Miller, N.; Peterson, K.; Pilnick, B.; Rajkumar, R.; Rybski, P.; Salesky, B.; Seo, Y.-W.; Singh, S.; Snider, J.; Stentz, A.; Whittaker, W. R.; Wolkowicki, Z.; Ziglar, J.; Bae, H.; Brown, T.; Demitrish, D.; Litkouhi, B.; Nickolaou, J.; Sadekar, V.; Zhang, W.; Struble, J.; Taylor, M.; Darms, M.; Ferguson, D. Autonomous driving in urban environments: Boss and the urban challenge. J. Field Robot. 2008, 25(8), 425–466.

Winner, H.; Hakuli, S.; Lotz, F.; Singer, C. (Eds.) Handbook of Driver Assistance Systems; Springer International Publishing, 2014; pp. 405–430.

Yuan, K.; Huang, Y.; Yang, S.; Wu, M.; Cao, D.; Chen, Q.; Chen, H. Evolutionary decision-making and planning for autonomous driving: A hybrid augmented intelligence framework. IEEE Trans. Intell. Transp. Syst. 2024, 25(7), 7339–7351.

Kim, S.W.; Liu, W.; Ang, M.H.; Frazzoli, E.; Rus, D. The impact of cooperative perception on decision making and planning of autonomous vehicles. IEEE Intell. Transp. Syst. Mag. 2015, 7(3), 39–50.

Koopman, P.; Wagner, M. Autonomous vehicle safety: An interdisciplinary challenge. IEEE Intell. Transp. Syst. Mag. 2017, 9(1), 90–96.

Åström, K.J.; Murray, R.M. Feedback Systems: An Introduction for Scientists and Engineers; Princeton University Press, 2010.

Pomerleau, D.A. ALVINN: An autonomous land vehicle in a neural network. Adv. Neural Inf. Process. Syst. 1989, 1, 305–313.

Thorpe, C.; Hebert, M.; Kanade, T.; Shafer, S. Toward Autonomous Driving: The CMU Navlab. Part I: Perception. IEEE Expert 1991, 6(4), 31–42.

Dickmanns, E.D. Dynamic Vision for Perception and Control of Motion; Springer London: London, 2007.

Williams, M. PROMETHEUS-The European research programme for optimising the road transport system in Europe. In IEE Colloquium on Driver Information; IET, 1988.

Paden, B.; Čáp, M.; Yong, S.Z.; Yershov, D.; Frazzoli, E. A survey of motion planning and control techniques for self-driving urban vehicles. IEEE Trans. Intell. Veh. 2016, 1(1), 33–55.

Dissanayake, M.G.; Newman, P.; Clark, S.; Durrant-Whyte, H.F.; Csorba, M. A solution to the simultaneous localization and map building (SLAM) problem. IEEE Trans. Robot. Autom. 2001, 17(3), 229–241.

Levinson, J.; Askeland, J.; Becker, J.; Dolson, J.; Held, D.; Kammel, S.; Kolter, J. Z.; Langer, D.; Pink, O.; Pratt, V.; Sokolsky, M.; Stanek, G.; Stavens, D.; Teichman, A.; Werling, M.; Thrun, S. Towards fully autonomous driving: Systems and algorithms. In Proceedings of the 2011 IEEE Intelligent Vehicles Symposium, Baden-Baden, Germany, 5-9 June 2011; pp. 163–168.

Aksjonov, A.; Kyrki, V. Rule-based decision-making system for autonomous vehicles at intersections with mixed traffic environment. In Proceedings of the 2021 IEEE International Intelligent Transportation Systems Conference (ITSC), Indianapolis, IN, USA, 19-22 September 2021; pp. 660–666.

Poupart, P. Partially observable Markov decision processes. In Encyclopedia of Machine Learning and Data Mining; Springer: Boston, MA, USA, 2017; pp. 959–966.

Brechtel, S.; Gindele, T.; Dillmann, R. Probabilistic decision-making under uncertainty for autonomous driving using continuous POMDPs. In Proceedings of the 17th International IEEE Conference on Intelligent Transportation Systems (ITSC), Qingdao, China, 8-11 October 2014; pp. 392–399.

Puterman, M.L. Markov Decision Processes: Discrete Stochastic Dynamic Programming; John Wiley & Sons, 2014.

Vahidi, A.; Eskandarian, A. Research advances in intelligent collision avoidance and adaptive cruise control. IEEE Trans. Intell. Transp. Syst. 2003, 4(3), 143–153.

Kolter, J.; Abbeel, P.; Ng, A. Hierarchical apprenticeship learning with application to quadruped locomotion. Adv. Neural Inf. Process. Syst. 2007, 20.

Rizehvandi, A.; Azadi, S.; Eichberger, A. Decision-Making Policy for Autonomous Vehicles on Highways Using Deep Reinforcement Learning (DRL) Method. Automation 2024, 5(4), 564.

Grisetti, G.; Kümmerle, R.; Stachniss, C.; Burgard, W. A tutorial on graph-based SLAM. IEEE Intell. Transp. Syst. Mag. 2010, 2(4), 31–43.

Moosmann, F.; Pink, O.; Stiller, C. Segmentation of 3D lidar data in non-flat urban environments using a local convexity criterion. In Proceedings of the 2009 IEEE Intelligent Vehicles Symposium, Xi'an, China, 3-5 June 2009; pp. 215–220.

Chen, X.; Ma, H.; Wan, J.; Li, B.; Xia, T. Multi-view 3D object detection network for autonomous driving. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21-26 July 2017; pp. 6526–6534.

Haralick, R.M. Statistical and structural approaches to texture. Proc. IEEE 1979, 67(5), 786–804.

Bar-Shalom, Y.; Li, X.R.; Kirubarajan, T. Estimation with Applications to Tracking and Navigation: Theory Algorithms and Software; John Wiley & Sons, 2001.

Winner, H.; Hakuli, S.; Lotz, F.; Singer, C. (Eds.) Handbook of Driver Assistance Systems; Springer International Publishing: Amsterdam, The Netherlands, 2014; pp. 405–430.

Thrun, S.; Montemerlo, M.; Dahlkamp, H.; Stavens, D.; Aron, A.; Diebel, J.; Fong, P.; Gale, J.; Halpenny, M.; Hoffmann, G.; Lau, K.; Oakley, C.; Palatucci, M.; Pratt, V.; Stang, P.; Strohband, S.; Dupont, C.; Jendrossek, L.-E.; Koelen, C.; Markey, C.; Rummel, C.; van Niekerk, J.; Jensen, E.; Alessandrini, P.; Bradski, G.; Davies, B.; Ettinger, S.; Kaehler, A.; Nefian, A.; Mahoney, P. Stanley: The robot that won the DARPA Grand Challenge. J. Field Robot. 2006, 23(9), 661–692.

Bertsekas, D. Dynamic Programming and Optimal Control: Volume I, 4th ed.; Athena Scientific: Belmont, MA, USA, 2012; Volume 4.

Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Commun. ACM 2017, 60(6), 84–90.

Heaton, J. Ian Goodfellow, Yoshua Bengio, and Aaron Courville: Deep Learning. The MIT Press, 2016, 800 pp, ISBN: 0262035618. Genet. Program. Evolvable Mach. 2018, 19(1), 305–307.

Silver, D.; Huang, A.; Maddison, C.J.; Guez, A.; Sifre, L.; van den Driessche, G.; Schrittwieser, J.; Antonoglou, I.; Panneershelvam, V.; Lanctot, M.; Dieleman, S.; Grewe, D.; Nham, J.; Kalchbrenner, N.; Sutskever, I.; Lillicrap, T.; Leach, M.; Kavukcuoglu, K.; Graepel, T.; Hassabis, D. Mastering the game of Go with deep neural networks and tree search. Nature 2016, 529(7587), 484–489.

Chen, C.; Seff, A.; Kornhauser, A.; Xiao, J. DeepDriving: Learning affordance for direct perception in autonomous driving. In Proceedings of the 2015 IEEE International Conference on Computer Vision, Santiago, Chile, 7-13 December 2015; pp. 2722–2730.

Bojarski, M.; Del Testa, D.; Dworakowski, D.; Firner, B.; Flepp, B.; Goyal, P.; Jackel, L.D.; Monfort, M.; Muller, U.; Zhang, J.; Zhang, X.; Zhao, J.; Zieba, K. End to end learning for self-driving cars. arXiv 2016, arXiv:1604.07316.

Rizehvandi, A.; Azadi, S. Design of a Path-Following Controller for Autonomous Vehicles Using an Optimized Deep Deterministic Policy Gradient Method. Int. J. Automot. Mech. Eng. 2024, 21(3), 11682–11694.

Treiber, M.; Kesting, A. Traffic Flow Dynamics; Springer: Berlin, Germany, 2013; Volume 1.

Urmson, C.; Anhalt, J.; Bagnell, D.; Baker, C.; Bittner, R.; Clark, M.N.; Dolan, J.M.; Duggins, D.; Galatali, T.; Geyer, C.; Gittleman, M.; Harbaugh, S.; Hebert, M.; Howard, T.M.; Kolski, S.; Kelly, A.; Likhachev, M.; Mcnaughton, M.; Miller, N.; Peterson, K.; Pilnick, B.; Rajkumar, R.; Rybski, P.; Salesky, B.; Seo, Y.-W.; Singh, S.; Snider, J.; Stentz, A.; Whittaker, W.; Wolkowicki, Z.; Ziglar, J.; Bae, H.; Brown, T.; Demitrish, D.; Litkouhi, B.; Nickolaou, J.; Sadekar, V.; Zhang, W.; Struble, J.; Taylor, M.; Darms, M.; Ferguson, D. Autonomous driving in urban environments: Boss and the urban challenge. J. Field Robot. 2008, 25(8), 425–466.

Althoff, M.; Dolan, J.M. Online verification of automated road vehicles using reachability analysis. IEEE Trans. Robot. 2014, 30(4), 903–918.

Hubmann, C.; Becker, M.; Althoff, D.; Lenz, D.; Stiller, C. Decision making for autonomous driving considering interaction and uncertain prediction of surrounding vehicles. In Proceedings of the 2017 IEEE Intelligent Vehicles Symposium (IV), Los Angeles, CA, USA, 11-14 June 2017; pp. 1671–1678.

Lefèvre, S.; Vasquez, D.; Laugier, C. A survey on motion prediction and risk assessment for intelligent vehicles. ROBOMECH J. 2014, 1(1), 1.

Rizehvandi, A.; Azadi, S. Highway decision-making strategy for autonomous vehicle for overtaking maneuver using deep reinforcement learning (DRL) method. Am. J. Mech. Eng. 2024, 56(4), 595–620.

Zhao, X.; Zhang, Y.; Liu, W. Advancements in Deep Reinforcement Learning for Autonomous Vehicle Decision Making: A Review of Recent Progress. IEEE Trans. Intell. Transp. Syst. 2023, 24(1), 35–48.

Cheng, Q.; Wang, H.; Yang, Z. Real-Time Decision-Making for Autonomous Vehicles Using Edge Computing: Challenges and Opportunities. IEEE Access 2022, 10, 74129–74142.

Sutton, R.S.; Barto, A.G. Reinforcement Learning: An Introduction; MIT Press, 2018; pp. 329–331.

Rizehvandi, A.; Azadi, S.; Eichberger, A. Enhancing Highway Driving: High Automated Vehicle Decision Making in a Complex Multi-Body Simulation Environment. Modelling 2024, 5(3), 951–968.

Chen, Y.; Kamarainen, J.K.; Laaksonen, L.J. Deep reinforcement learning for decision making in autonomous driving. IEEE Access 2019, 7, 82513–82525.

Shalev-Shwartz, S.; Shammah, S.; Shashua, A. Safe, multi-agent, reinforcement learning for autonomous driving. arXiv 2016, arXiv:1610.03295.

Kiran, B.R.; Sobh, I.; Talpaert, V.; Mannion, P.; Al Sallab, A.A.; Yogamani, S.; Pérez, P. Deep reinforcement learning for autonomous driving: A survey. IEEE Trans. Intell. Transp. Syst. 2021, 23(6), 4909–4926.

Dosovitskiy, A.; Ros, G.; Codevilla, F.; Lopez, A.; Koltun, V. CARLA: An open urban driving simulator. In Proceedings of the Conference on Robot Learning, Mountain View, CA, USA, 13-15 November 2017; pp. 1–16.

Bojarski, M.; Del Testa, D.; Dworakowski, D.; Firner, B.; Flepp, B.; Goyal, P.; Jackel, L.D.; Monfort, M.; Muller, U.; Zhang, J.; Zhang, X.; Zhao, J.; Zieba, K. End to end learning for self-driving cars. arXiv 2016, arXiv:1604.07316.

Gros, C.; Kester, L.; Martens, M.; Werkhoven, P. Addressing ethical challenges in automated vehicles: bridging the gap with hybrid AI and augmented utilitarianism. AI Ethics 2025, 5(3), 2757–2770.

Thornton, S.M.; Pan, S.; Erlien, S.M.; Gerdes, J.C. Incorporating ethical considerations into automated vehicle control. IEEE Trans. Intell. Transp. Syst. 2016, 18(6), 1429–1439.

Shalev-Shwartz, S.; Shammah, S.; Shashua, A. On a formal model of safe and scalable self-driving cars. arXiv 2017, arXiv:1708.06374.

Li, X.; Sun, Z.; Cao, D.; He, Z.; Zhu, Q. Real-time trajectory planning for autonomous urban driving: Framework, algorithms, and verifications. IEEE/ASME Trans. Mechatron. 2015, 21(2), 740–753.

Makahleh, H.Y.; Ferranti, E.J.S.; Dissanayake, D. Assessing the role of autonomous vehicles in urban areas: A systematic review of literature. Future Transp. 2024, 4(2), 321–348.

Jain, Y.K.; Sahu, S.; Singh, J.; Maheshwari, A.; Choudhary, D.K.; Bhagwati, M.T. Scalable AI Models for Real-Time Ethical Decision-Making in Autonomous Business Operations. In Proceedings of the 2025 International Conference on Automation and Computation (AUTOCOM), Dehradun, India, 4-6 March 2025; pp. 1503–1508.

Shladover, S.E. Connected and automated vehicle systems: Introduction and overview. J. Intell. Transp. Syst. 2018, 22(3), 190–200.

Kalra, N.; Paddock, S.M. Driving to safety: How many miles of driving would it take to demonstrate autonomous vehicle reliability? Transp. Res. Part A Policy Pract. 2016, 94, 182–193.

Amoozadeh, M.; Raghuramu, A.; Chuah, C.N.; Ghosal, D.; Zhang, H.M.; Rowe, J.; Levitt, K. Security vulnerabilities of connected vehicle streams and their impact on cooperative driving. IEEE Commun. Mag. 2015, 53(6), 126–132.

Wang, J.; Shao, Y.; Ge, Y.; Yu, R. A survey of vehicle to everything (V2X) testing. Sensors 2019, 19(2), 334.

Li, S.; Sun, X.; Zhang, D. Vehicle-to-Everything (V2X) Communication for Autonomous Vehicles: Current Challenges and Future Directions. Sensors 2023, 23(7), 2021–2038.

Wang, D.; Fu, W.; Song, Q.; Zhou, J. Potential risk assessment for safe driving of autonomous vehicles under occluded vision. Sci. Rep. 2022, 12, 4981.

Li, S.; Zhang, J.; Wang, S.; Li, P.; Liao, Y. Ethical and Legal Dilemma of Autonomous Vehicles: Study on Driving Decision-Making Model under the Emergency Situations of Red Light-Running Behaviors. Electronics 2018, 7(10), 264.

Autonomous Vehicles: An opportunity for Cities. Available online: https://urbanmobilitycourses.eu/courses/autonomous-vehicles-an-opportunity-for-cities/ (accessed on 15 June 2025).

Atakishiyev, S.; Salameh, M.; Yao, H.; Goebel, R. Explainable artificial intelligence for autonomous driving: A comprehensive overview and field guide for future research directions. IEEE Access 2024, 12, 101603–101625.

Muzahid, A.J.M.; Kamarulzaman, S.F.; Rahman, M.A.; Murad, S.A.; Kamal, M.A.S.; Alenezi, A.H. Multiple vehicle cooperation and collision avoidance in automated vehicles: survey and an AI-enabled conceptual framework. Sci. Rep. 2023, 13, 603.

Lenox, M.; McDermott, J. Driving Waymo's fully autonomous future. Personal communication, 2022.

Tesla, Inc. Autopilot: How Tesla's Self-Driving System Works. Available online: https://www.tesla.com/support/autopilot (accessed on 17 June 2025).

Sonnemaker, T. Uber is reportedly in talks to sell its self-driving vehicle division to a startup run by former Uber, Tesla, and Google executives and backed by Amazon. Bus. Insid. 2020.

Li, Z.; Shi, L.; He, W. A adaptability mechanisms study of traffic liability-division in autonomous vehicle era. Transp. Policy 2025, 171, 566–578.

Villasenor, J. Products Liability and Driverless Cars: Issues and Guiding Principles for Legislation. Brookings Institution, 2014.